I was looking at some of the new tooling and frameworks that let agents generate UI. Text-only output gets limiting fast. Once agents can render more complex UI across multiple platforms, you could even end up running Doom right in your chat experience: https://x.com/rauchg/status/1978235161398673553?s=20

Google just released an open-source A2UI SDK to enable this, but there are others too. I was taking a look at MCP Apps (formerly MCP-UI). These frameworks' overarching goal is to enable a richer experience in chat interfaces. A lot of today's interactions have been limited to text, but with MCP Apps and others this is changing. An MCP tool can now define UI components and return them to the calling client in a standard format that the client knows how to render. This could start to let AI agents generate their own UI on the fly. It seems like a cool concept, and it opens the door to much richer experiences.
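To make the "tool defines UI, client renders it" idea a bit more concrete, here is a rough sketch of what a tool result carrying a UI payload might look like. It does not use any real SDK. The function name `make_ui_tool_result`, the `ui://example/heatmap` URI, and the exact field layout are my own assumptions, loosely modeled on how MCP tool results embed resources, so check the MCP Apps docs for the actual shape.

```python
from html import escape


def make_ui_tool_result(title: str, values: list[list[float]]) -> dict:
    """Build a hypothetical tool result that embeds an HTML UI resource.

    Assumption: the client recognizes an embedded resource with a
    `ui://` URI and an HTML MIME type, and renders it in a sandbox.
    """
    # Render each value (expected in 0..1) as a table cell whose
    # background opacity reflects the value: a very crude heatmap.
    rows = "".join(
        "<tr>"
        + "".join(
            f"<td style='background:rgba(255,0,0,{v:.2f})'>{v:.2f}</td>"
            for v in row
        )
        + "</tr>"
        for row in values
    )
    html = f"<h3>{escape(title)}</h3><table>{rows}</table>"

    # Hypothetical result shape: one content item wrapping the UI resource.
    return {
        "content": [
            {
                "type": "resource",
                "resource": {
                    "uri": "ui://example/heatmap",  # made-up identifier
                    "mimeType": "text/html",
                    "text": html,
                },
            }
        ]
    }


if __name__ == "__main__":
    result = make_ui_tool_result("Demo heatmap", [[0.1, 0.9], [0.5, 0.3]])
    print(result["content"][0]["resource"]["text"])
```

The point is just that the tool hands back plain data describing the UI, and the client decides how (and whether) to render it.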

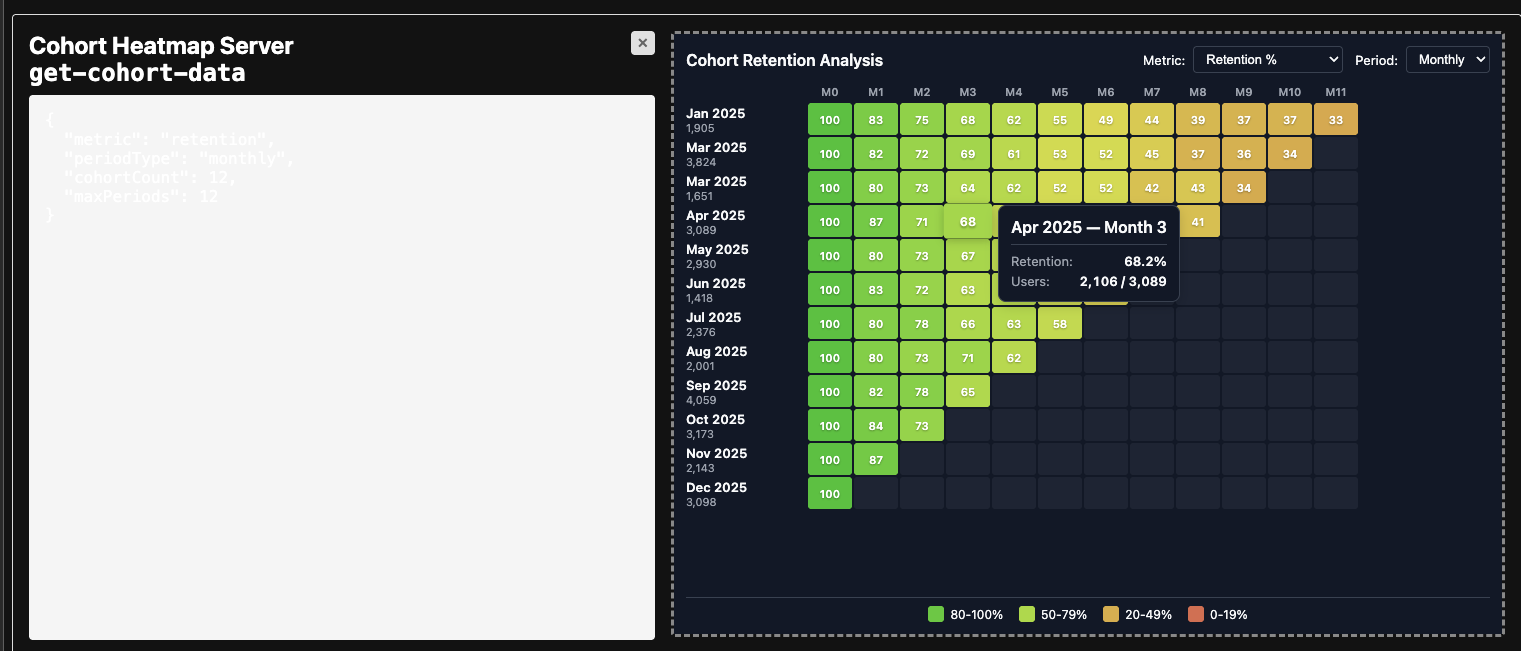

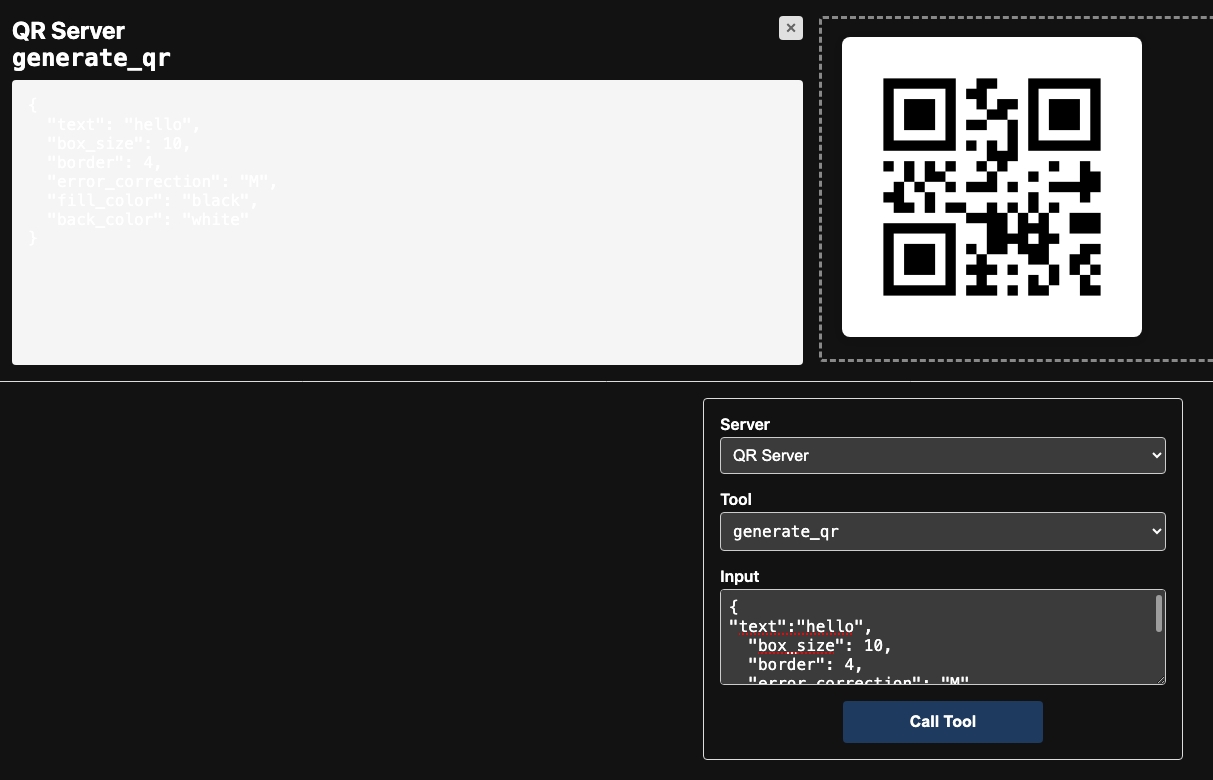

The examples that come with MCP Apps are a great starting point. Here are a couple I tried out: a nice interactive heatmap visual and a QR code image render.

MCP Apps heatmap

QR code generation